July 2025 has been an absolute blockbuster month for HeyGen users. Between upgraded avatars that actually look human, voice engines that sound eerily natural, and an AI agent that can practically edit videos for you, the platform leveled up in ways that can make your content-creation workflow feel like magic. Let’s dive into what’s new, what’s improved, and why your videos are about to get noticeably better.

What is it? Avatar IV received its most significant update since launch, introducing script-based dynamic gestures, prompt-controlled movement, and micro-expressions that respond naturally to your content. Videos can now run up to 60 seconds in crisp 1080p resolution. [Source: HeyGen Community]

Designed to do: Transform static talking heads into expressive, lifelike presenters that gesture naturally while speaking. Whether you’re explaining a concept, selling a product, or telling a story, your avatar now moves and reacts like a real person would.

How it improves HeyGen: The upgrade targets the biggest complaint about AI avatars, namely that they felt robotic and lifeless. Now when your script mentions “three key points,” your avatar can actually hold up three fingers. Some users report engagement increases of 40–60% compared with static avatars. [Source: HeyGen Product Updates]

- Script-Based Gestures: Avatars automatically gesture based on your content

- Custom Motion Prompts: Direct specific movements like “waving” or “pointing”

- Extended Video Length: Create longer, more comprehensive videos of up to 60 seconds

- Enhanced Realism: Every blink, shift, and expression feels authentically human

What is it? HeyGen introduced Fish, a premium voice engine that delivers unprecedented naturalness and emotion in AI-generated speech. It excels at voice clone similarity, accent retention, and reducing that telltale “robotic” quality that plagued earlier AI voices. [Source: HeyGen Voice Engine Guide]

Designed to do: Solve the three biggest voice challenges users faced: monotone delivery, poor voice matching, and lost accents. Fish specifically targets creators who need their AI voice to sound authentically like themselves or maintain regional dialects.

User experience improvement: According to HeyGen’s internal evaluations, Fish substantially outperforms previous engines on voice-clone similarity scores. Users with strong accents, from Southern American to British to Australian, report that their cloned voices finally sound “like them” rather than like a generic approximation.

“Fish is a game-changer for maintaining accent authenticity. My Scottish accent finally comes through properly instead of sounding like generic AI.” — Community user feedback

What is it? With Product Placement, you upload product photos and HeyGen avatars interact naturally with your items in the generated videos. From clothing and accessories to tech gadgets and food products, avatars can wear, hold, or demonstrate virtually anything. [Source: HeyGen Product Placement Guide]

Designed to do: Create authentic product demonstrations and UGC-style ads without hiring models, renting studios, or organizing photo shoots. Perfect for e-commerce brands, startups with limited budgets, or rapid campaign testing.

Real-world impact: Brands report cutting video production costs by 70–80% while increasing output volume by 500%. One clothing retailer generated 50 different product videos in a single afternoon, work that previously would have required weeks of coordination and thousands of dollars in production costs. [Source: PWR AI Tools]

- Upload Multiple Angles: Provide various product shots for better accuracy

- Context-Aware Placement: Products appear naturally within realistic scenarios

- Powered by Avatar IV: Hyper-realistic gestures and lip-sync

- Instant UGC Creation: Generate scroll-stopping social media content

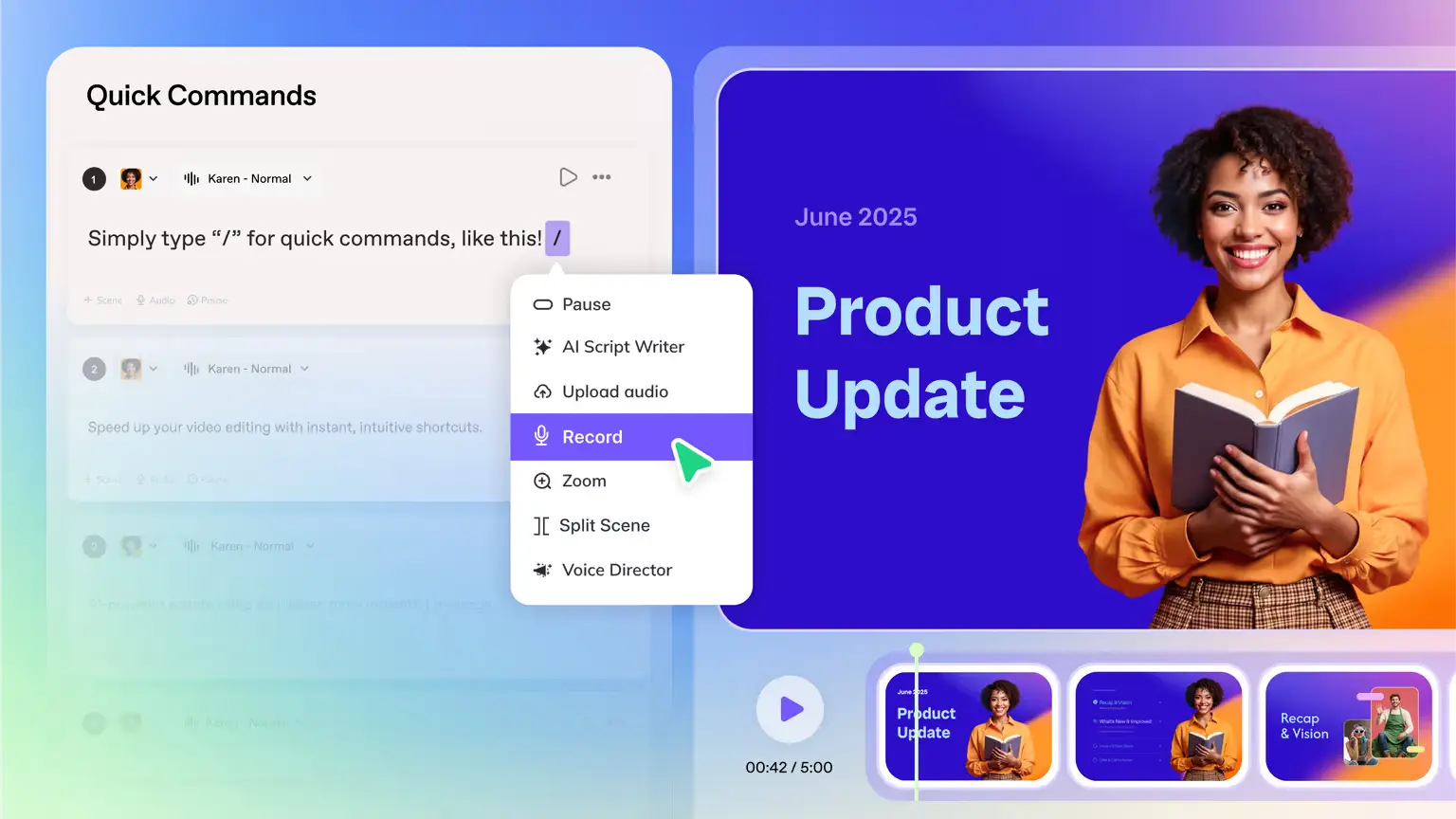

What is it? Voice Director gives creators granular control over how their avatar delivers every word. Using natural language prompts like “emphasize this” or “sound more excited,” users can shape tone, pacing, and emotion to match their message perfectly. [Source: HeyGen Blog]

Designed to do: Bridge the gap between robotic text-to-speech and authentic human delivery. Whether you need professional corporate tone, casual conversational style, or energetic sales presentation, Voice Director ensures your message lands exactly as intended.

Integration benefits: When paired with Avatar IV’s Expressive Avatars, Voice Director’s vocal emotion carries through to the avatar’s facial expressions and body language, producing cohesive, believable performances.

What is it? HeyGen integrated Google’s Veo model into its “Add Motion” feature, enabling dynamic, action-focused video clips with full-body movement and realistic background motion. It is well suited to fitness content, dance videos, or any scenario requiring authentic physical activity. [Source: Creative Pad Media]

Designed to do: Solve the static avatar problem for content requiring movement. Instead of talking heads, creators can now generate avatars lifting weights, dancing, cooking, or engaging in virtually any physical activity with realistic motion physics.

Creative expansion: This opens up entire content categories that were previously impossible with AI avatars. Fitness instruction, cooking channels, dance tutorials, and product demonstrations that require physical interaction all become feasible without human performers.

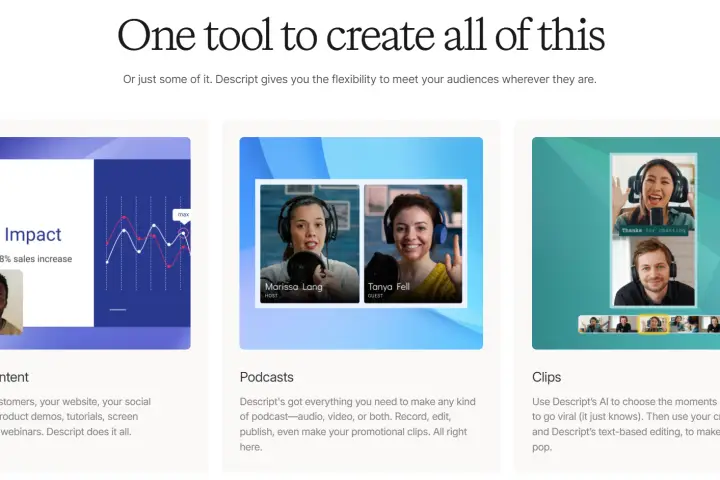

What is it? Video Agent is HeyGen’s most ambitious project yet: a creative AI agent that functions as an entire production team. Input a single prompt like “explain bitcoin to my grandma in a gameshow style” and receive a complete, publish-ready video with script, visuals, voiceover, editing, and transitions. [Source: HeyGen Video Agent]

Designed to do: Eliminate the traditional video production pipeline entirely. No more scriptwriting, asset hunting, voice recording, or timeline editing. The Video Agent handles creative decisions, story structure, pacing, and technical execution autonomously.

Revolutionary impact: Early waitlist feedback suggests this could compress weeks of traditional video work into minutes. Over 57,000 users have already signed up for early access, indicating massive demand for fully automated content creation. [Source: DataNorth AI]

“Video Agent represents the first true ‘prompt-native creative engine’—it’s not a tool or copilot, it’s a creative agent doing the work for you.” — HeyGen Product Team

What is it? Gesture Control allows creators to assign specific movements—thumbs up, pointing, nodding, even background actions like pets walking by—to exact words or moments in their script. Each gesture syncs perfectly with the intended timing. [Source: HeyGen Help Center]

Designed to do: Add personality, emphasis, and natural human behavior to avatar performances. Instead of generic talking heads, avatars can now react, gesture, and move in sync with their content, creating more engaging and relatable presentations.

Technical requirements: Gesture Control works only with Hyper-Realistic Avatars created after June 15, 2025, and those avatars need a specific filming structure: 30 seconds of neutral footage, followed by a gesture every 2 seconds, returning to a neutral pose between movements. [Source: HeyGen Community Guide]

- Automatic Detection: AI identifies and suggests gesture placements

- Manual Fine-Tuning: Precise timing control for perfect synchronization

- Scene Integration: Gestures trigger natural scene cuts for smooth flow

- Beyond Hand Movements: Supports facial expressions and background elements
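Keeping to the filming structure in the technical requirements (30 seconds of neutral footage, then a gesture every 2 seconds) is easier with a written cue sheet. The sketch below is a hypothetical planning helper, not a HeyGen tool or API: `Cue`, `build_cue_sheet`, `format_timestamp`, and the two constants are illustrative names, and the timings are copied from the requirements above rather than from an official specification. It simply lists when to hold neutral and when to perform each gesture.

```python
from dataclasses import dataclass

# Assumed timings, copied from the filming structure described above.
# HeyGen's own filming guide is the authority on the exact numbers.
NEUTRAL_SECONDS = 30.0
GESTURE_INTERVAL_SECONDS = 2.0


@dataclass
class Cue:
    start: float  # seconds from the start of the recording
    action: str   # what the presenter does at this moment


def build_cue_sheet(gestures: list[str],
                    neutral: float = NEUTRAL_SECONDS,
                    interval: float = GESTURE_INTERVAL_SECONDS) -> list[Cue]:
    """Hypothetical helper: turn a list of gestures into timed recording cues."""
    # Open with the neutral segment, then space the gestures evenly after it.
    cues = [Cue(0.0, "hold neutral pose")]
    for i, gesture in enumerate(gestures):
        cues.append(Cue(neutral + i * interval,
                        f"{gesture}, then return to neutral"))
    return cues


def format_timestamp(seconds: float) -> str:
    """Render whole seconds as MM:SS for a printable cue sheet."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


if __name__ == "__main__":
    for cue in build_cue_sheet(["thumbs up", "point left", "nod"]):
        print(format_timestamp(cue.start), cue.action)
```

Running it with three gestures prints cues at 00:00, 00:30, 00:32, and 00:34. Since requirements like these can change, it is worth checking HeyGen’s current filming guide before recording.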

The July updates have generated significant buzz in HeyGen’s community, with creators sharing impressive results and practical applications across industries. [Source: HeyGen Community Spotlights]

“Avatar IV’s gesture control completely changed how I create educational content. My students actually pay attention now because the avatar looks like a real teacher, not a talking statue.” — Sarah M., Online Educator

“Product Placement dropped last week and our community knows how to make an ad. All it takes: 1 product photo + 1 avatar.” — HeyGen Official Twitter

“Fish voice engine finally made my AI clone sound like me instead of my robotic cousin. The accent retention is incredible.” — Marcus R., Content Creator

These July updates collectively address the three biggest pain points in AI video creation: authenticity, efficiency, and creative control. The combination of natural gestures, realistic voices, and automated production creates a tipping point where AI-generated content becomes genuinely compelling rather than obviously artificial. [Source: HeyGen June 2025 Release Notes]

- Production Speed: Complete videos in minutes rather than hours or days

- Cost Reduction: Eliminate actor fees, studio rentals, and extensive editing time

- Creative Freedom: Test multiple concepts, styles, and approaches instantly

- Scalability: Generate personalized content for different audiences simultaneously

- Consistency: Maintain brand voice and visual style across all content

HeyGen’s July 2025 updates represent more than incremental improvements—they signal a fundamental shift in how video content gets created. When AI avatars move naturally, speak authentically, and integrate seamlessly with products and environments, the line between “AI-generated” and “professionally produced” begins to blur significantly. [Source: Sidecar AI Analysis]

For creators, marketers, and businesses, these tools democratize high-quality video production in unprecedented ways. Small businesses can now compete with major brands in content quality and volume. Individual creators can scale their output without proportional increases in time or budget. Enterprise teams can localize and personalize content across markets and demographics efficiently.

The pending Video Agent launch promises to push this transformation even further, potentially making traditional video production workflows obsolete for many use cases. As one community member noted, “We’re watching the transition from video creation as craft to video creation as conversation.”

July 2025 will likely be remembered as the month AI video generation truly came of age—not just as a novelty or cost-cutting measure, but as a legitimate creative medium capable of authentic, engaging storytelling. The future of content creation isn’t just automated; it’s genuinely intelligent.